ImPlot == Cool!

05/06/2021

I am currently looking at implementing meshing of point clouds using Radial Basis Functions (RBF) as outlined in the paper Reconstruction and Representation of 3D Objects with Radial Basis Functions by Carr et al. This paper uses the Fast Multipole Method (FMM), with which I was unfamiliar. The paper points to a tutorial, A short course on fast multipole methods. While trying to grok what is outlined in this tutorial, I wanted to plot some simple data, so I started to look around for a 2D plotting package with C++ bindings. I didn't find a lot of great choices. The first thing I looked at was Matplotlib, but this requires Python. Blech. My coworker then pointed me to ImPlot, which is built on top of ImGui (which I was already using for UI elements... see Dear ImGui). I have to say, for adding simple 2D plots to your app, it's pretty nice. Below is a screenshot of one of the real-time graph examples that comes with ImPlot.
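For context, here is the sum that makes RBF surfaces expensive and that the FMM is meant to speed up. As I understand Carr et al., the surface is the zero set of s(x) = p(x) + sum_i lambda_i * phi(|x - x_i|), using the biharmonic basis phi(r) = r and a linear polynomial p. Evaluating that sum directly costs O(N) per point, or O(N*M) for M points. The sketch below shows only that direct evaluation, with already-known weights. The names (RbfModel, Evaluate) are hypothetical. The sketch does not solve for the weights and is not an FMM.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 { double x, y, z; };

// Hypothetical container: RBF centers x_i, weights lambda_i, and the linear
// polynomial p(x) = c0 + c1*x + c2*y + c3*z. Fitting these is not shown.
struct RbfModel {
    std::vector<Vec3> centers;
    std::vector<double> weights;
    double c[4];
};

double Distance(const Vec3& a, const Vec3& b) {
    double dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Direct evaluation of s(x) with phi(r) = r. Every call touches all N centers,
// which is the cost the fast multipole method approximates away.
double Evaluate(const RbfModel& m, const Vec3& p) {
    double s = m.c[0] + m.c[1] * p.x + m.c[2] * p.y + m.c[3] * p.z;
    for (std::size_t i = 0; i < m.centers.size(); ++i)
        s += m.weights[i] * Distance(p, m.centers[i]);
    return s;
}
```

With one center at the origin, weight 2, and p(x) = 1, evaluating at (3, 4, 0) gives 1 + 2 * 5 = 11.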

Marching Cubes on the GPU

04/202/2021

I recently implemented marching cubes (MC) inside my Caustic renderer using compute shaders.

I started out with a pure CPU version, which is rather trivial to implement (see CMeshConstructor::MeshFromDensityFunction()). I then converted this CPU implementation into two different GPU implementations. The C++ side of these GPU versions is handled in CSceneMarchingCubesElem.cpp.

GPU Version #1

The first version is found in MCSinglePass.cs. The name of this file is a bit of a misnomer, since my implementation actually dispatches this compute shader twice. The first pass only retrieves the total number of vertices generated, so that we can allocate a vertex buffer of the correct size. The second pass then emits the vertices into that buffer. This version only outputs a vertex buffer (i.e. no index buffer), which means there is no vertex sharing.
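The count-then-allocate-then-emit pattern can be mimicked on the CPU. In the minimal sketch below, the same "kernel" runs twice: once only to count, and once to write. std::atomic's fetch_add stands in for HLSL's InterlockedAdd(), and each loop iteration stands in for one GPU thread. VertsForVoxel is a hypothetical placeholder for the marching cubes triangle-table lookup; it is not the real table.

```cpp
#include <atomic>
#include <cstdint>
#include <vector>

struct Vertex { float x, y, z; };

// Hypothetical stand-in for the triangle table: how many vertices (3 per
// triangle) a voxel emits. A real version would index the 256-entry table.
uint32_t VertsForVoxel(uint32_t voxel) { return (voxel % 3) * 3; }

// One "kernel" run in both passes. With out == nullptr it only counts;
// otherwise it reserves a range of slots and writes vertices into it.
void RunKernel(uint32_t numVoxels, std::atomic<uint32_t>& counter, Vertex* out) {
    for (uint32_t v = 0; v < numVoxels; ++v) {  // each iteration ~ one GPU thread
        uint32_t n = VertsForVoxel(v);
        if (n == 0) continue;
        // fetch_add ~ InterlockedAdd(): returns the old value, i.e. this
        // voxel's first slot in the output buffer.
        uint32_t base = counter.fetch_add(n);
        if (out)
            for (uint32_t k = 0; k < n; ++k)
                out[base + k] = Vertex{float(v), float(k), 0.0f};
    }
}

std::vector<Vertex> TwoPassEmit(uint32_t numVoxels) {
    std::atomic<uint32_t> counter{0};
    RunKernel(numVoxels, counter, nullptr);    // pass 1: count only
    std::vector<Vertex> vb(counter.load());    // allocate exactly enough
    counter = 0;
    RunKernel(numVoxels, counter, vb.data());  // pass 2: emit
    return vb;
}
```

On a real GPU, threads reserve their slots in an arbitrary order, so the output order varies between runs. That doesn't matter for a plain triangle list.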

GPU Version #2

This version enables vertex sharing by outputting both a vertex buffer and an index buffer. It is implemented as 3 separate compute shaders that are run as a pipeline with the following steps: MCCountVerts => MCAllocVerts => MCEmitVerts

- MCCountVerts.cs

This pass does two things. First, it counts the total number of vertices that will be emitted by the final stage. Second, for each voxel it flags which vertices are referenced. Each voxel has 8 corner values from the signed distance function (SDF) provided by the client. These SDF values indicate whether each corner lies inside or outside the surface. Since the SDF values are shared with neighboring voxels, each voxel only stores the SDF value for vertex 0 (see Figure 1).
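Here is a minimal CPU sketch of the storage scheme described above. It keeps one SDF sample per lattice point, so voxel (x, y, z) owns only its corner 0 and reads the other 7 corners from its neighbors' samples. The corner numbering is an assumption: it follows Paul Bourke's widely used marching cubes tables, which agrees with edges 0, 3 and 8 all touching corner 0. Treating SDF < 0 as inside is also an assumption, consistent with the sphere SDF used later in this post. SdfGrid, Sample and CubeIndex are hypothetical names.

```cpp
#include <cmath>
#include <cstdint>
#include <vector>

// Corner offsets, assuming Paul Bourke's numbering (corner 0 is the voxel's own point).
constexpr int kCorner[8][3] = {
    {0, 0, 0}, {1, 0, 0}, {1, 1, 0}, {0, 1, 0},
    {0, 0, 1}, {1, 0, 1}, {1, 1, 1}, {0, 1, 1}};

// (n+1)^3 SDF samples for n^3 voxels; voxel (x,y,z) owns only the sample at (x,y,z).
struct SdfGrid {
    int n;                      // voxels per axis
    std::vector<float> values;  // (n+1)^3 samples, x fastest
    float At(int x, int y, int z) const {
        return values[(z * (n + 1) + y) * (n + 1) + x];
    }
};

// Samples f on the unit cube at the lattice points.
template <class F>
SdfGrid Sample(int n, F f) {
    SdfGrid g{n, std::vector<float>((n + 1) * (n + 1) * (n + 1))};
    for (int z = 0; z <= n; ++z)
        for (int y = 0; y <= n; ++y)
            for (int x = 0; x <= n; ++x)
                g.values[(z * (n + 1) + y) * (n + 1) + x] =
                    f(float(x) / n, float(y) / n, float(z) / n);
    return g;
}

// 8-bit cube index: bit i is set when corner i is inside (SDF < 0).
uint8_t CubeIndex(const SdfGrid& g, int x, int y, int z) {
    uint8_t idx = 0;
    for (int i = 0; i < 8; ++i)
        if (g.At(x + kCorner[i][0], y + kCorner[i][1], z + kCorner[i][2]) < 0.0f)
            idx |= uint8_t(1u << i);
    return idx;
}
```

The cube index is what a marching cubes implementation uses to look up which edges and triangles a voxel produces.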

The other 7 values are read from neighboring voxels. From these 8 SDF values there are potentially 12 different vertices (one per cube edge) where the surface intersects the voxel. These vertices are located where the SDF value is 0 (see Figure 2).
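A common way to place each of those vertices is to linearly interpolate the SDF along the edge and solve for zero. If the endpoint values are s0 and s1, the crossing sits at t = s0 / (s0 - s1). The minimal sketch below does that. It also builds a 12-bit mask of the edges the surface crosses, meaning edges with one inside corner and one outside corner. It assumes the same Bourke-style edge numbering (edge 0 = corners 0-1, edge 3 = corners 3-0, edge 8 = corners 0-4). ZeroCrossing and CrossedEdges are hypothetical names.

```cpp
#include <array>
#include <cstdint>

struct Vec3 { float x, y, z; };

// Corner endpoints of the 12 edges, assuming Paul Bourke's numbering.
constexpr int kEdge[12][2] = {
    {0, 1}, {1, 2}, {2, 3}, {3, 0}, {4, 5}, {5, 6},
    {6, 7}, {7, 4}, {0, 4}, {1, 5}, {2, 6}, {3, 7}};

// Point on segment p0-p1 where the linearly interpolated SDF is 0.
// Only meaningful when s0 and s1 straddle zero (so s0 - s1 != 0).
Vec3 ZeroCrossing(Vec3 p0, Vec3 p1, float s0, float s1) {
    float t = s0 / (s0 - s1);
    return {p0.x + t * (p1.x - p0.x), p0.y + t * (p1.y - p0.y), p0.z + t * (p1.z - p0.z)};
}

// Bit e is set when edge e has exactly one inside corner (SDF < 0),
// i.e. the surface crosses it and a vertex lies on it.
uint16_t CrossedEdges(const std::array<float, 8>& s) {
    uint16_t mask = 0;
    for (int e = 0; e < 12; ++e) {
        bool in0 = s[kEdge[e][0]] < 0.0f;
        bool in1 = s[kEdge[e][1]] < 0.0f;
        if (in0 != in1) mask |= uint16_t(1u << e);
    }
    return mask;
}
```

For example, when only corner 0 is inside, exactly edges 0, 3 and 8 are crossed (mask 0b100001001 = 265).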

Again, since these vertices are shared with neighboring voxels, each voxel only emits vertices 0, 3, and 8 (assuming a polygon references them).
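The sketch below is a hypothetical CPU version of that bookkeeping, again assuming Bourke-style numbering. Each of a voxel's 12 edges maps to the voxel that owns it, plus which of the owner's edges (0, 3 or 8) it is. A neighbor that references a shared edge therefore sets a bit in the owner's flag word. Because several threads can hit the same word, the update uses fetch_or, the CPU analogue of HLSL's InterlockedOr(). Counting a vertex only when the fetch_or return value shows the bit was previously clear keeps shared vertices from being counted twice. That counting scheme, and the names RefFlags and kEdgeOwner, are my assumptions and not necessarily what MCCountVerts.cs does.

```cpp
#include <array>
#include <atomic>
#include <cstddef>
#include <cstdint>
#include <vector>

// For each cube edge: offset to the owning lattice point, and which owned
// edge it is (bit 0 = edge 0 along x, bit 1 = edge 3 along y, bit 2 = edge 8 along z).
struct EdgeOwner { int dx, dy, dz, bit; };
constexpr std::array<EdgeOwner, 12> kEdgeOwner = {{
    {0, 0, 0, 0}, {1, 0, 0, 1}, {0, 1, 0, 0}, {0, 0, 0, 1},
    {0, 0, 1, 0}, {1, 0, 1, 1}, {0, 1, 1, 0}, {0, 0, 1, 1},
    {0, 0, 0, 2}, {1, 0, 0, 2}, {1, 1, 0, 2}, {0, 1, 0, 2}}};

// One flag word per lattice point: (n+1)^3, since edges on the far faces are
// owned by points one past the last voxel. Plus a global vertex count.
struct RefFlags {
    int n;
    std::vector<std::atomic<uint32_t>> flags;
    std::atomic<uint32_t> vertexCount{0};
    explicit RefFlags(int n_) : n(n_), flags(std::size_t(n_ + 1) * (n_ + 1) * (n_ + 1)) {}

    // Called by voxel (x,y,z) for each edge its triangles use. Safe to call
    // from many threads at once.
    void Reference(int x, int y, int z, int edge) {
        const EdgeOwner& o = kEdgeOwner[edge];
        std::size_t idx = (std::size_t(z + o.dz) * (n + 1) + (y + o.dy)) * (n + 1) + (x + o.dx);
        uint32_t mask = 1u << o.bit;
        uint32_t old = flags[idx].fetch_or(mask);       // ~ InterlockedOr()
        if ((old & mask) == 0) vertexCount.fetch_add(1);  // ~ InterlockedAdd(), once per vertex
    }
};
```

Referencing every edge of every voxel in a 4x4x4 grid yields 300 unique vertices (100 edges along each axis), no matter how many voxels share each edge.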

Updating the total vertex count and setting a vertex's reference flag must use InterlockedAdd() and InterlockedOr(), respectively. The reason is that multiple GPU threads may attempt to update these values at the same time, and the Interlocked...() calls ensure that each operation is atomic. The second key requirement is that the variables we call Interlocked...() on must be declared with the globallycoherent modifier. This ensures that writes to them are flushed across all GPU thread groups.

- MCAllocVerts.cs

After the first stage runs, we can allocate vertex and index buffers of the correct size. This stage determines the index where each referenced vertex will reside in the vertex buffer.

- MCEmitVerts.cs

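This stage writes the actual data to the vertex and index buffers. Since we want to bind these output buffers (as ID3D11Buffer) as the vertex and index buffers for Draw(), we can't declare them as RWStructuredBuffer (Direct3D 11 doesn't allow a structured buffer to also be bound as a vertex or index buffer). Thus we declare them as RWByteAddressBuffer.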
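The last two stages can also be mimicked on the CPU. This sketch assumes the first stage's reference flags have been flattened into a 0/1 array. An exclusive prefix sum (std::exclusive_scan, C++17) then assigns each referenced vertex its slot and yields the total. This is one common way to do the MCAllocVerts step, and not necessarily how MCAllocVerts.cs does it. The emit half treats the output as raw bytes, the way a RWByteAddressBuffer is addressed. In HLSL a float3 position would typically be written with Store3() at a 4-byte-aligned byte address; here memcpy plays that role. The 12-byte, position-only vertex layout and the function names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <numeric>
#include <vector>

// Stage 2 analogue: slot[i] = number of referenced vertices before i.
std::vector<uint32_t> AssignSlots(const std::vector<uint32_t>& referenced, uint32_t& total) {
    std::vector<uint32_t> slot(referenced.size());
    std::exclusive_scan(referenced.begin(), referenced.end(), slot.begin(), 0u);
    total = referenced.empty() ? 0 : slot.back() + referenced.back();
    return slot;
}

// Stage 3 analogue: raw byte buffers addressed by byte offset.
constexpr uint32_t kStride = 12;  // assumed vertex layout: float3 position only

void StorePosition(std::vector<uint8_t>& buf, uint32_t slot, const float pos[3]) {
    std::memcpy(buf.data() + std::size_t(slot) * kStride, pos, kStride);  // ~ Store3()
}

void StoreIndex(std::vector<uint8_t>& buf, uint32_t i, uint32_t value) {
    std::memcpy(buf.data() + std::size_t(i) * 4, &value, 4);  // ~ Store()
}
```

Because slots come from the scan rather than from an atomic counter, each vertex lands at a deterministic position. The index buffer can then refer to those positions directly.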

Here is the final wireframe output, generated using the following SDF:

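```cpp
// Signed distance to a sphere of radius 0.5 centered at (0.5, 0.5, 0.5):
// negative inside, zero on the surface, positive outside.
auto spherePDF = [](Vector3& v)->float
{
    static Vector3 center(0.5f, 0.5f, 0.5f);
    return (v - center).Length() - 0.5f;
};
```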

NN visualizer

10/21/2020

I almost never run programs off the internet directly (it's way too easy to acquire a virus that way). Instead, I sandbox the app I want to run by firing up a VM. I recently started cleaning out some old VHDs that were taking up space when I ran across one called Netron. I couldn't remember what it was at first. It's a visualizer that shows you the structure of a neural network. This is super useful if you are taking a model, say from PyTorch, and converting it to ONNX, since you need to know the names of the inputs/outputs. The app can be found here:

https://github.com/lutzroeder/netron

Dear ImGui

02/09/2021

I recently had to build a demo for work and needed a GUI frontend. Generally, my go-to UI framework is Windows Presentation Foundation (WPF). It is super robust and covers pretty much everything you would want out of a UI framework. The one giant downside is that it's primarily geared towards .NET languages such as C#. In general, this means you need to write the front end of your application in C# or C++/CLI and then interop down into native C++ for the bulk of your application. In other words, it's a giant PITA.

WPF is being supplanted by WinUI. Unfortunately, at the time of writing WinUI still isn't quite up to snuff for building native C++ applications that aren't UWP-centric (WinUI 3.0 is still in preview), so I passed on trying it. For a good overview of the differences between WPF and WinUI, see this excellent article: Building Modern Desktop Apps—Is WinUI 3.0 the Way to Go? (telerik.com).

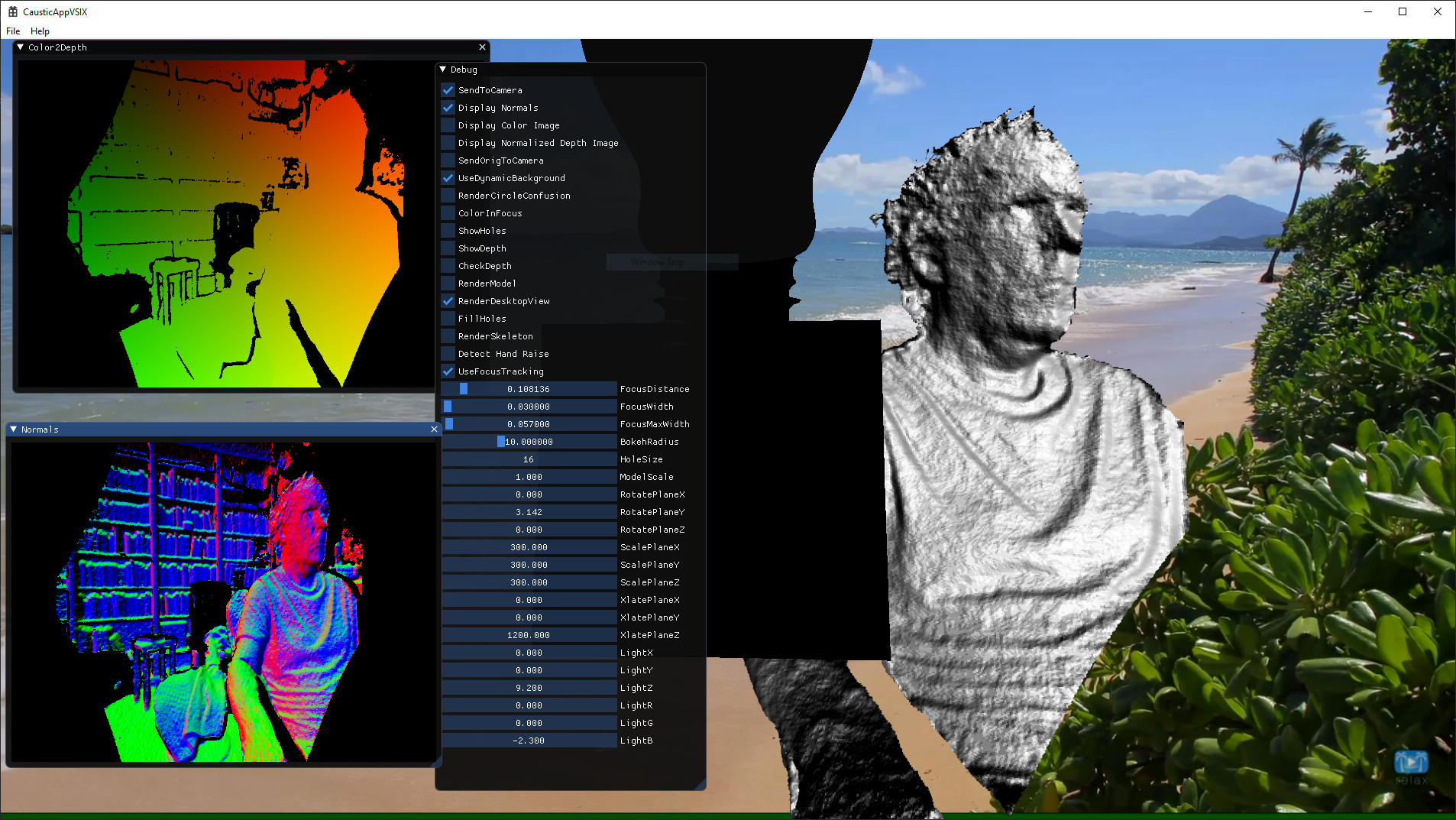

So, if I need to build a fully native application, my go-to UI framework has been wxWidgets. Then my boss pointed me to Dear ImGui. When I first read how it works, I was super skeptical. It's a fully immediate-mode UI, i.e. every frame you recreate the entire UI, and the application maintains all state. This sounds nuts. However, it's freaking awesome for building small demos fast. The thing I didn't think about is that with a traditional retained-mode framework you often end up indirectly maintaining state anyway, by implementing callbacks that copy values from the UI into your application. It's way simpler just to have the UI write directly to the place you would have copied the values to. Also, much of the mundane work that would otherwise have to be implemented by the application (e.g. keyboard/mouse input, windowing support, etc.) has already been written for whatever rendering framework (e.g. DX11, DX10, ...) you are using. Thus your application can make a few simple calls during its rendering loop and get all this functionality. Below is some sample code from my demo along with a screenshot of the demo's output showing some of the UI elements possible (checkboxes, sliders, image windows, etc.).

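Sample Code:

```cpp
// Start a new Dear ImGui frame (DX11 renderer + Win32 platform backends)
ImGui_ImplDX11_NewFrame();
ImGui_ImplWin32_NewFrame();
ImGui::NewFrame();

// Each widget reads and writes the application's state directly
ImGui::Checkbox("SendToCamera", &app.sendToCamera);
if (ImGui::IsItemHovered())
    ImGui::SetTooltip("Should we send image to camera driver?");
ImGui::Checkbox("Display Normals", &app.displayNorms);
ImGui::Checkbox("Display Color Image", &app.displayColorImage);
ImGui::Checkbox("Display Normalized Depth Image", &app.displayDepthImage);
ImGui::Checkbox("SendOrigToCamera", &app.sendOrigToCamera);

// End of frame: build the draw data and submit it through the DX11 backend
ImGui::Render();
ImGui_ImplDX11_RenderDrawData(ImGui::GetDrawData());
```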
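To show why the immediate-mode approach removes the copy-the-value callbacks, here is a toy immediate-mode checkbox. It is hypothetical and not how Dear ImGui is implemented internally. The widget is just a function call made every frame. It reads this frame's input, flips the application's bool through the pointer it was given, and records what to draw. Like ImGui::Checkbox, it returns true when the value changed. ToyUi and its members are made-up names.

```cpp
#include <string>
#include <utility>
#include <vector>

// Toy immediate-mode UI: no widget objects survive between frames.
struct ToyUi {
    std::vector<std::string> drawList;  // what would be rendered this frame
    bool mouseClicked = false;          // this frame's input
    std::string clickedLabel;           // widget the click landed on (hit testing faked)

    void NewFrame(bool clicked, std::string target) {
        drawList.clear();
        mouseClicked = clicked;
        clickedLabel = std::move(target);
    }

    // Returns true when the value changed.
    bool Checkbox(const std::string& label, bool* v) {
        bool changed = false;
        if (mouseClicked && clickedLabel == label) {
            *v = !*v;  // write straight into application state: no callback, no copy
            changed = true;
        }
        drawList.push_back((*v ? "[x] " : "[ ] ") + label);
        return changed;
    }
};
```

The application's bool is the only copy of the state. The "UI" is rebuilt from it every frame.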

Sample Output:

Simpler Reflection in C#

09/17/2020

As I mentioned in an earlier post, I wrote a visual editor for building \Psi programs. For most nodes in a \Psi program the inputs/outputs are known ahead of time. For instance, my editor exposes a node for performing a Gaussian blur. The input to that node is the image to be blurred. This makes writing the code for that node pretty simple:

```csharp
var inputStreamName1 = this.inputs[0].Connections[0].Parent.GetOutputStreamName(this.inputs[0].Connections[0]);
Emitter<Shared<Microsoft.Psi.Imaging.Image>> emitter1 = ctx.connectors[inputStreamName1] as Emitter<Shared<Microsoft.Psi.Imaging.Image>>;
var he = Microsoft.Psi.OpenCV.PsiOpenCV.GaussianBlur(emitter1, (int)GetProperty("WindowSize"), (double)GetProperty("Sigma"));
ctx.connectors.Add(GetOutputStreamName(outputs[0]), he.Out);
```

As can be seen in the second line, I know that the emitter has type Shared<Microsoft.Psi.Imaging.Image>.

However, for some operators, I don't know in advance what the type is. This is where I use C#'s reflection support to call the correct function. For instance, I have a node for \Psi's "return" generator that simply returns an arbitrary (type-wise) object as its output. In order to make this work, I have to run code similar to the following:

```csharp
object[] args = { ctx.pipeline, value };
var methods = typeof(Generators).GetMethods();
foreach (var method in methods)
{
    if (method.Name == "Return")
    {
        Type[] argTypes = new Type[] { value.GetType() };
        var repeatMethod = method.MakeGenericMethod(argTypes);
        object prod = repeatMethod.Invoke(null, args);
        object outprod = prod.GetType().GetProperty("Out").GetValue(prod);
        ctx.connectors.Add(GetOutputStreamName(outputs[0]), outprod);
        break;
    }
}
```

As shown in the example above, we first need to walk across all the methods (GetMethods()) exposed by type Generators and find the correct one. We then need to make a generic method (MakeGenericMethod()), using the value's runtime type as the type argument. Finally, we call the generic method with the arguments we supplied (Invoke()).

This works for the most part. Where this becomes super difficult is when the arguments to the method are multilevel templates (nested generic types) and the method we are attempting to call is an extension method. The first problem is finding the correct extension method. We can do this by enumerating over the methods of the extension class using something like:

```csharp
var ms = typeof(Microsoft.Psi.Operators).GetMethods();
foreach (var m in ms)
{
    if (m.Name.Contains("Join"))
    {
        // Possibly use this method
    }
}
```

One problem with this approach is that there can be many overloads of Join<> (e.g. Join<TPrimary,TSecondary>, Join<TPrimary,TSecondary1,TSecondary2>, etc.), and we need to find the correct one.

The second problem is that we might have to construct new types in order to select and call the right overload. For instance, here is the signature of one version of Join<>:

```csharp
public static IProducer<(TPrimary, TSecondary)> Join<TPrimary, TSecondary>(
    this IProducer<TPrimary> primary,
    IProducer<TSecondary> secondary,
    RelativeTimeInterval relativeTimeInterval,
    DeliveryPolicy<TPrimary> primaryDeliveryPolicy = null,
    DeliveryPolicy<TSecondary> secondaryDeliveryPolicy = null)
```

Notice that the first and second arguments are generic types built from the TPrimary/TSecondary type parameters (i.e. IProducer<TPrimary>). Thus, in order to call the generic method, we need to do something like:

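```csharp
// Closed versions of the parameter types, e.g. IProducer<T0> and DeliveryPolicy<T0>
var q = typeof(IProducer<>);
var i0 = q.MakeGenericType(inputType0);
var i1 = q.MakeGenericType(inputType1);
var qd = typeof(DeliveryPolicy<>);
var q0 = qd.MakeGenericType(inputType0);
var q1 = qd.MakeGenericType(inputType1);
Type[] paramTypes = new Type[] { i0, i1, typeof(RelativeTimeInterval), q0, q1 };
// MakeGenericMethod takes the method's type arguments (TPrimary, TSecondary), not its
// parameter types; this assumes m is an overload with exactly two type parameters.
var joinMethod = m.MakeGenericMethod(inputType0, inputType1);
// Compare against the closed parameter types to confirm this is the overload we want.
var ps = joinMethod.GetParameters();
bool isMatch = ps.Length == paramTypes.Length;
for (int k = 0; isMatch && k < ps.Length; k++)
    isMatch = ps[k].ParameterType == paramTypes[k];
```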

The upshot of all this is that using reflection to call heavily templatized methods is a royal PITA.

Luckily, there is a much simpler solution: simply create a new generic type! Then instantiate that type, and let it do all the heavy lifting. This way we avoid all the nested nastiness of types on the method we are trying to call. Below is the implementation of PsiEditorJoin<> using this pattern. Inside it we can call Join() directly, without worrying about all its various overloads. We let the compiler figure it out.

```csharp
// Generic helper: inside it T1/T2 are known, so the compiler picks the Join() overload.
class PsiEditorJoin<T1, T2>
{
    public void Preview(ref PreviewContext ctx, List<DispConnector> inputs, List<DispConnector> outputs, string outputStreamName)
    {
        var inName0 = inputs[0].Connections[0].Parent.GetOutputStreamName(inputs[0].Connections[0]);
        var emitter0 = ctx.connectors[inName0] as Emitter<T1>;
        var inName1 = inputs[1].Connections[0].Parent.GetOutputStreamName(inputs[1].Connections[0]);
        var emitter1 = ctx.connectors[inName1] as Emitter<T2>;
        var firstArg = emitter0.Join(emitter1, new RelativeTimeInterval(TimeSpan.FromSeconds(-1), TimeSpan.FromSeconds(1)));
        ctx.connectors.Add(outputStreamName, firstArg.Out);
        inputs[0].ValueType = typeof(T1);
        inputs[1].ValueType = typeof(T2);
        outputs[0].ValueType = typeof((T1, T2));
    }
}

// The Join node's Preview: only the helper's type needs to be built via reflection.
public override void Preview(ref PreviewContext ctx)
{
    if (!visited)
    {
        visited = true;
        base.Preview(ref ctx);
        var inputType0 = this.inputs[0].Connections[0].ValueType;
        var inputType1 = this.inputs[1].Connections[0].ValueType;
        var d1 = typeof(PsiEditorJoin<,>);
        System.Type[] typeArgs = { inputType0, inputType1 };
        var objType = d1.MakeGenericType(typeArgs);
        object o = System.Activator.CreateInstance(objType);
        var previewMethodInfo = objType.GetMethod("Preview");
        object[] parameters = { ctx, this.inputs, this.outputs, GetOutputStreamName(outputs[0]) };
        previewMethodInfo.Invoke(o, parameters);
        // Invoke() writes ref arguments back into the array, so copy ctx back out.
        ctx = (PreviewContext)parameters[0];
    }
}
```

This is way cleaner and simpler to understand.
